Dify

- Ops

- 2025-04-12


Overview

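- Dify is an open-source LLM application development platform. Its intuitive interface combines AI workflows, RAG pipelines, agents, model management, observability and more, so you can move quickly from prototype to production.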

- Repository

https://github.com/langgenius/dify

- Documentation

https://docs.dify.ai/v/zh-hans

- Main project directory layout

```text
dify/
├── AUTHORS
├── CONTRIBUTING.md
├── docker-legacy
├── docker
├── README.md
├── api
│   ├── Dockerfile
│   ├── poetry.lock
│   ├── poetry.toml
│   ├── pyproject.toml
│   └── ...
├── web
│   ├── Dockerfile
│   ├── ...
│   └── yarn.lock
└── ...
```

Deployment notes

- This chapter targets deployments of a locally customized Dify codebase that is delivered to a Kubernetes cluster.

- For personal use, simply start everything in one step with the official docker-compose.yaml.

- Dify releases frequently. As long as you have not modified its code, repeating the steps below keeps you on the latest official version.

6.1 Build the frontend image

6.1.1 Yarn.lock

- Steps

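- Switch the registry URLs in web/yarn.lock to a mirror inside China. The commands below use the npmmirror registry (registry.npmmirror.com):

```bash
sed -i 's#registry.npmjs.org#registry.npmmirror.com#g' web/yarn.lock
sed -i 's#registry.yarnpkg.com#registry.npmmirror.com#g' web/yarn.lock
```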
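Where `sed -i` is unavailable (for example on a developer laptop without GNU sed), the same host replacement can be scripted. This is a minimal sketch, not part of Dify: it does a plain string replacement of the two upstream hosts, which is what the two `sed` commands above effectively do for yarn.lock. The function names are illustrative.

```python
from pathlib import Path

# Upstream registry hosts that appear in the "resolved" URLs of yarn.lock
UPSTREAM_HOSTS = ("registry.npmjs.org", "registry.yarnpkg.com")


def rewrite_registry(text: str, mirror: str = "registry.npmmirror.com") -> str:
    """Return yarn.lock text with each upstream registry host replaced by `mirror`."""
    for host in UPSTREAM_HOSTS:
        text = text.replace(host, mirror)
    return text


def rewrite_file(path: str, mirror: str = "registry.npmmirror.com") -> bool:
    """Rewrite a yarn.lock in place; return True if anything changed."""
    p = Path(path)
    old = p.read_text(encoding="utf-8")
    new = rewrite_registry(old, mirror)
    if new != old:
        p.write_text(new, encoding="utf-8")
    return new != old
```

Usage: run `rewrite_file("web/yarn.lock")` from the project root. Only the host part is replaced, so switching to a self-hosted repository that also changes the scheme or path still needs the full-prefix replacement shown later in the upgrade section.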

6.1.2 Dockerfile

- Steps

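Create Dockerfile_web in the project root; it is used to build the frontend image. It is adapted from web/Dockerfile with the base image replaced by a custom one. Its content is as follows: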

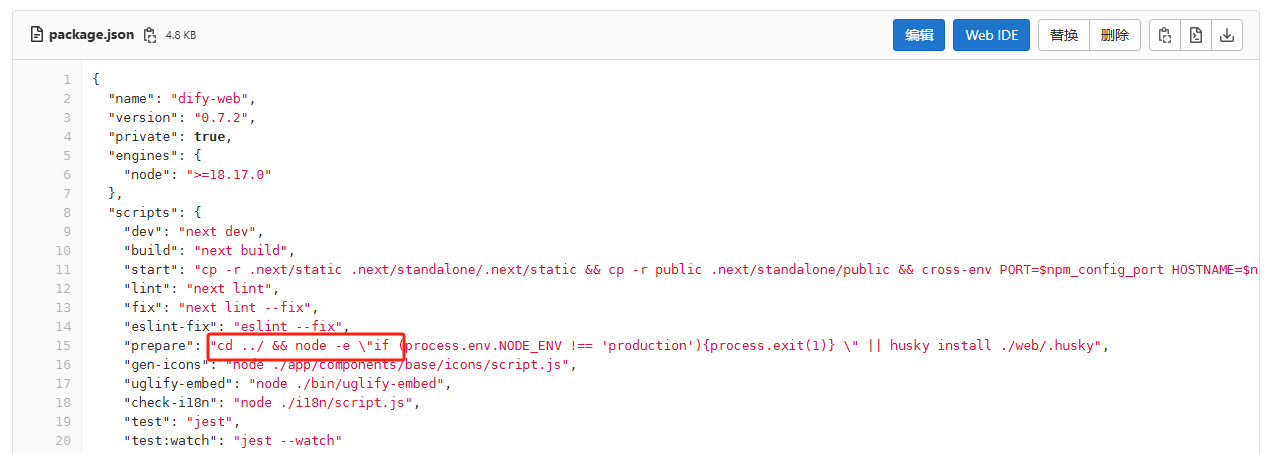

```dockerfile
# base image
FROM hukanfa/nodejs:v20.11.0-rockylinux9.3 AS base
LABEL maintainer="1148389663@qq.com"

# if you located in China, you can use aliyun mirror to speed up
# RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories

RUN yum -y install tzdata

# install packages
FROM base AS packages

RUN mkdir -p /app
COPY .git /app/.git

WORKDIR /app/web

COPY web/package.json .
COPY web/yarn.lock .

# if you located in China, you can use taobao registry to speed up
# RUN yarn install --frozen-lockfile --registry https://registry.npmmirror.com/

RUN ls -la \
    && yarn config set registry https://registry.npmmirror.com/ \
    && yarn install --frozen-lockfile

# build resources
FROM base AS builder
WORKDIR /app/web
COPY --from=packages /app/web/ .
COPY web/ .

RUN ls -la && yarn build

# production stage
FROM base AS production

ENV NODE_ENV=production
ENV EDITION=SELF_HOSTED
ENV DEPLOY_ENV=PRODUCTION
ENV CONSOLE_API_URL=http://127.0.0.1:5001
ENV APP_API_URL=http://127.0.0.1:5001
ENV PORT=3000
ENV NEXT_TELEMETRY_DISABLED=1

# set timezone
ENV TZ="Asia/Shanghai"
RUN ln -snf /usr/share/zoneinfo/${TZ} /etc/localtime \
    && echo ${TZ} > /etc/timezone

# global runtime packages
RUN yarn global add pm2 \
    && yarn cache clean

WORKDIR /app/web
COPY --from=builder /app/web/public ./public
COPY --from=builder /app/web/.next/standalone ./
COPY --from=builder /app/web/.next/static ./.next/static

COPY web/docker/pm2.json ./pm2.json
COPY web/docker/entrypoint.sh ./entrypoint.sh

ARG COMMIT_SHA
ENV COMMIT_SHA=${COMMIT_SHA}

EXPOSE 3000
ENTRYPOINT ["/bin/sh", "./entrypoint.sh"]
```

- Using the original web/Dockerfile directly also works, but it must be copied up to the project root and built from there: web/package.json references files in the parent directory, and when building from web/ those files fall outside the Docker build context, so the build fails.

- Build the image

```bash
# run from the project root
docker build -t hukanfa/dify-web:v0.8.0-rockylinux9.3 -f Dockerfile_web .
```

6.1.3 Custom Node.js base image

- Steps

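- As noted above, the original Dockerfile can be used as-is, but its package sources should be switched to a mirror inside China, such as Alibaba Cloud or Tsinghua.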

- Below is a Dockerfile that builds a Node.js image on the Rocky Linux operating system:

```dockerfile
FROM rockylinux:9.3

# preliminaries
USER root
WORKDIR /app

# install basic tools
RUN yum -y install vim telnet which net-tools unzip gawk zip wget iputils procps sudo xz git

# install python
RUN yum -y install python3 python3-pip \
    && rm -f /usr/bin/python && ln -s /usr/bin/python3 /usr/bin/python \
    && rm -f /usr/bin/pip && ln -s /usr/bin/pip3 /usr/bin/pip

# environment variables
ENV NODE_VERSION="v20.11.0" NODE_HOME=/usr/local/node

# install node
RUN wget -q https://npmmirror.com/mirrors/node/${NODE_VERSION}/node-${NODE_VERSION}-linux-x64.tar.xz \
    && tar -xf node-${NODE_VERSION}-linux-x64.tar.xz -C /usr/local/ \
    && mv /usr/local/node-${NODE_VERSION}-linux-x64 ${NODE_HOME} \
    && rm -rf node-${NODE_VERSION}-linux-x64.tar.xz

# put node on the global PATH
ENV PATH=${NODE_HOME}/bin:$PATH

# install yarn (requires node)
RUN npm config set registry https://registry.npmmirror.com/ \
    && npm install -g yarn \
    && yarn config set registry https://registry.npmmirror.com
```

- Build the image

```bash
docker build -t hukanfa/nodejs:v20.11.0-rockylinux9.3 .
```

6.2 Build the backend image

6.2.1 Download the nltk data

- Steps

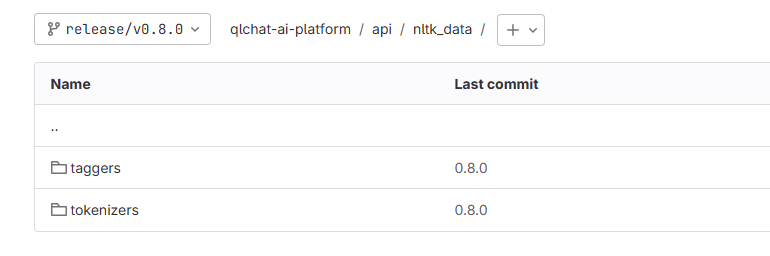

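- The project's api/Dockerfile downloads nltk data packages during the build, but the default download location is outside China and the download usually fails:

```dockerfile
# Download nltk data
RUN python -c "import nltk; nltk.download('punkt'); nltk.download('averaged_perceptron_tagger')"
```

- Download location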

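https://github.com/nltk/nltk_data; only the following files are needed:

```text
# packages/taggers
averaged_perceptron_tagger.xml
averaged_perceptron_tagger.zip
# packages/tokenizers
punkt.xml
punkt.zip
```

- Create a folder named nltk_data under the project's api directory and put the files above into it. Do not change the paths or folder names (see the screenshot).
- Preferably add these files to the repository and push the update.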
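Because the Dockerfile later moves api/nltk_data to /root (one of nltk's default search locations), a misplaced file only shows up at runtime. A small pre-build check can catch that earlier. This is a sketch under one stated assumption: the files keep the category sub-folders from the nltk_data repository (`packages/taggers` becomes `taggers/`, `packages/tokenizers` becomes `tokenizers/`), which is the layout nltk searches for resources such as `tokenizers/punkt`. The function name is illustrative.

```python
from pathlib import Path

# Files listed above, relative to api/nltk_data.
# Assumed layout: <category>/<package>.{xml,zip}, as nltk expects under a data directory.
REQUIRED = (
    "taggers/averaged_perceptron_tagger.xml",
    "taggers/averaged_perceptron_tagger.zip",
    "tokenizers/punkt.xml",
    "tokenizers/punkt.zip",
)


def missing_nltk_files(root: str) -> list[str]:
    """Return the required files absent under `root`; an empty list means the layout is complete."""
    base = Path(root)
    return [rel for rel in REQUIRED if not (base / rel).is_file()]
```

Usage: `missing_nltk_files("api/nltk_data")` should return `[]` before you commit the folder and build the image.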

6.2.2 Dockerfile

- Steps

- This build mainly switches the package sources in api/pyproject.toml and api/poetry.lock to mirrors inside China. The Dockerfile is as follows:

```dockerfile
# base image
FROM python:3.10-slim-bookworm AS base

WORKDIR /app/api

# Install Poetry
ENV POETRY_VERSION=1.8.3

# if you located in China, you can use aliyun mirror to speed up
# RUN pip install --no-cache-dir poetry==${POETRY_VERSION} -i https://mirrors.aliyun.com/pypi/simple/
RUN pip install --no-cache-dir poetry==${POETRY_VERSION} -i https://pypi.tuna.tsinghua.edu.cn/simple/

# Configure Poetry
ENV POETRY_CACHE_DIR=/tmp/poetry_cache
ENV POETRY_NO_INTERACTION=1
ENV POETRY_VIRTUALENVS_IN_PROJECT=true
ENV POETRY_VIRTUALENVS_CREATE=true
ENV POETRY_REQUESTS_TIMEOUT=15

FROM base AS packages

# if you located in China, you can use aliyun mirror to speed up
# RUN sed -i 's@deb.debian.org@mirrors.aliyun.com@g' /etc/apt/sources.list.d/debian.sources

RUN sed -i 's@deb.debian.org@mirrors.tuna.tsinghua.edu.cn@g' /etc/apt/sources.list.d/debian.sources \
    && apt-get update \
    && apt-get install -y --no-install-recommends gcc g++ libc-dev libffi-dev libgmp-dev libmpfr-dev libmpc-dev

# Install Python dependencies
COPY pyproject.toml poetry.lock ./
# Key point: on the FIRST build, keep the three-line RUN below active and the single-line RUN
# after it commented out. On the second and later builds, do the opposite.
RUN poetry source add --priority=primary mirrors https://pypi.tuna.tsinghua.edu.cn/simple/ \
    && poetry lock --no-update \
    && poetry install --sync --no-cache --no-root
# RUN poetry install --sync --no-cache --no-root

# production stage
FROM base AS production

ENV FLASK_APP=app.py
ENV EDITION=SELF_HOSTED
ENV DEPLOY_ENV=PRODUCTION
ENV CONSOLE_API_URL=http://127.0.0.1:5001
ENV CONSOLE_WEB_URL=http://127.0.0.1:3000
ENV SERVICE_API_URL=http://127.0.0.1:5001
ENV APP_WEB_URL=http://127.0.0.1:3000

EXPOSE 5001

# set timezone
ENV TZ=UTC

WORKDIR /app/api

RUN sed -i 's@deb.debian.org@mirrors.tuna.tsinghua.edu.cn@g' /etc/apt/sources.list.d/debian.sources \
    && apt-get update \
    && apt-get install -y --no-install-recommends curl nodejs libgmp-dev libmpfr-dev libmpc-dev \
    # if you located in China, you can use aliyun mirror to speed up
    # && echo "deb http://mirrors.aliyun.com/debian testing main" > /etc/apt/sources.list \
    && echo "deb http://mirrors.tuna.tsinghua.edu.cn/debian testing main" > /etc/apt/sources.list \
    && apt-get update \
    # For Security
    && apt-get install -y --no-install-recommends zlib1g=1:1.3.dfsg+really1.3.1-1 expat=2.6.3-1 libldap-2.5-0=2.5.18+dfsg-3 perl=5.38.2-5 libsqlite3-0=3.46.0-1 \
    && apt-get autoremove -y \
    && rm -rf /var/lib/apt/lists/*

# Copy Python environment and packages
ENV VIRTUAL_ENV=/app/api/.venv
COPY --from=packages ${VIRTUAL_ENV} ${VIRTUAL_ENV}
ENV PATH="${VIRTUAL_ENV}/bin:${PATH}"

# Copy source code
# Also keep the poetry.lock produced in the packages stage under /app: it is the file we ultimately want
COPY --from=packages /app/api/poetry.lock /app
COPY . /app/api/

# Download nltk data
RUN mv /app/api/nltk_data /root \
    && python -c "import nltk; nltk.download('punkt'); nltk.download('averaged_perceptron_tagger')"

# Copy entrypoint
COPY docker/entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh

ARG COMMIT_SHA
ENV COMMIT_SHA=${COMMIT_SHA}

ENTRYPOINT ["/bin/bash", "/entrypoint.sh"]
```

- Build the image

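```bash
# This build takes relatively long, especially the "poetry lock --no-update" step
docker build -t hukanfa/dify-api:v0.8.0 .
```

- After the build succeeds, start a container from this image to retrieve the updated api/pyproject.toml and api/poetry.lock files: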

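```bash
# start a container
docker run -itd --name dify-api hukanfa/dify-api:v0.8.0
# copy the files above into the current directory
docker cp dify-api:/app/poetry.lock .
docker cp dify-api:/app/api/pyproject.toml .
```

- Update the repository

1. Delete api/pyproject.toml and api/poetry.lock and push once.
2. Add the files retrieved above back at their original paths and push again.

- This completes switching both files to mirrors inside China. It is somewhat tedious, but in the author's experience it is the most reliable way to change the sources.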

- Dockerfile: second adjustment

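- As mentioned above, change the following part of the Dockerfile and push the update to the repository:

```dockerfile
# Key point: on the first build, use the three commented lines below and comment out the last line;
# on the second and later builds, do the opposite (as shown here).
#RUN poetry source add --priority=primary mirrors https://pypi.tuna.tsinghua.edu.cn/simple/ && \
#    poetry lock --no-update && \
#    poetry install --sync --no-cache --no-root
RUN poetry install --sync --no-cache --no-root
```

- Rebuild the image

```bash
docker build -t hukanfa/dify-api:v0.8.0 .
```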

6.3 Base services

- Notes

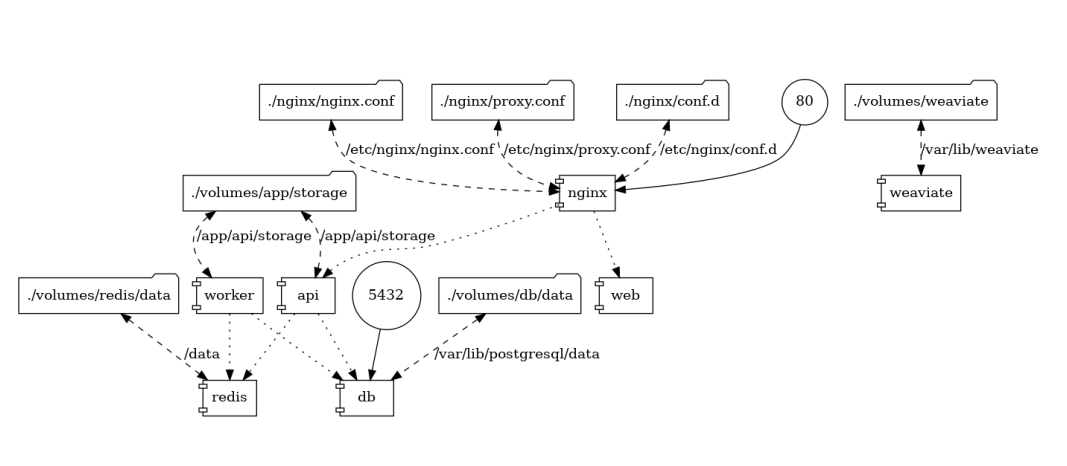

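- This section covers deploying the base services Dify depends on:

1. Relational database: postgres
2. In-memory database: redis
3. Vector database: weaviate
4. Sandbox: dify-sandbox
5. SSRF proxy: squid

- The official docker/docker-compose.yaml also includes other components and databases; everything not listed above is optional.
- The official request-flow diagram shows that only the base services listed above need to be deployed.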

6.3.1 Postgres

- Steps

- Directory layout

```text
dify-postgres/
├── docker-compose.yaml
└── pgsql.env
```

pgsql.env

```ini
PGUSER=postgres
POSTGRES_PASSWORD=difyai123456
POSTGRES_DB=dify
PGDATA=/var/lib/postgresql/data/pgdata
POSTGRES_MAX_CONNECTIONS=100
POSTGRES_SHARED_BUFFERS=128MB
POSTGRES_WORK_MEM=4MB
POSTGRES_MAINTENANCE_WORK_MEM=64MB
POSTGRES_EFFECTIVE_CACHE_SIZE=4096MB
TZ=Asia/Shanghai
```

docker-compose.yaml

```yaml
version: '3.5'
services:
  difydb:
    image: postgres:15-alpine
    container_name: difydb-pgsql
    restart: always
    env_file:
      - ./pgsql.env
    volumes:
      - ./data:/var/lib/postgresql/data
    ports:
      - "5434:5432"
    healthcheck:
      test: [ "CMD", "pg_isready" ]
      interval: 1s
      timeout: 3s
      retries: 30
```

- Start the service

```bash
docker-compose up -d
```

6.3.2 Redis

- Steps

- Directory layout

```text
dify-redis/
├── conf
│   └── redis.conf
└── docker-compose.yaml
```

redis.conf

```ini
port 6379
bind 0.0.0.0
requirepass 25ensjk3LjoPJ
daemonize no
loglevel notice
databases 16
appendonly yes
appendfilename "appendonly.aof"
appendfsync always
save 900 1
save 300 10
save 60 10000
dbfilename dump.rdb
dir /data
maxmemory 1g
maxmemory-policy allkeys-lru
```

docker-compose.yaml

```yaml
version: "3.5"
services:
  redis:
    image: redis:6-alpine
    container_name: dify-redis6
    restart: always
    volumes:
      - ./conf:/opt/config
      - ./data:/data
    command: redis-server /opt/config/redis.conf
    ports:
      - "6389:6379"
    healthcheck:
      test: [ "CMD", "redis-cli", "ping" ]
```

- Start the service

```bash
docker-compose up -d
```

6.3.3 Weaviate

- Steps

- Directory layout

```text
dify-weaviate/
├── docker-compose.yaml
└── weaviate.env
```

weaviate.env

```ini
# You can generate a strong key using `openssl rand -base64 42`
PERSISTENCE_DATA_PATH=/var/lib/weaviate
QUERY_DEFAULTS_LIMIT=25
AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED=false
DEFAULT_VECTORIZER_MODULE=none
CLUSTER_HOSTNAME=node1
AUTHENTICATION_APIKEY_ENABLED=true
AUTHENTICATION_APIKEY_ALLOWED_KEYS=WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih
AUTHENTICATION_APIKEY_USERS=super@qlchat.com
AUTHORIZATION_ADMINLIST_ENABLED=true
AUTHORIZATION_ADMINLIST_USERS=super@qlchat.com
```

docker-compose.yaml

```yaml
version: '3.5'
services:
  weaviate:
    image: semitechnologies/weaviate:1.19.0
    container_name: dify-weaviate
    restart: always
    volumes:
      - ./data:/var/lib/weaviate
    env_file:
      - ./weaviate.env
    ports:
      - "5435:8080"
```

- Start the service

```bash
docker-compose up -d
```
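Unlike the Postgres and Redis compose files, the Weaviate service above has no healthcheck. Weaviate exposes a readiness endpoint, `GET /v1/.well-known/ready`, that returns HTTP 200 once the node can serve requests; the sketch below polls it using only the standard library. Assumptions: the base URL is whatever host and port you published (`5435` above), and the API key is sent as a `Bearer` token, which is how Weaviate's API-key authentication works; if the readiness endpoint does not require auth in your version, the header is simply ignored. The function name is illustrative.

```python
import time
import urllib.request


def weaviate_ready(base_url: str, api_key: str = "", timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Poll GET {base_url}/v1/.well-known/ready until it returns HTTP 200 or `timeout` expires."""
    url = base_url.rstrip("/") + "/v1/.well-known/ready"
    # Weaviate API-key auth uses a Bearer token
    headers = {"Authorization": f"Bearer {api_key}"} if api_key else {}
    # Connect directly, ignoring any HTTP(S)_PROXY variables in the environment
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(urllib.request.Request(url, headers=headers), timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            pass  # connection refused, timeout, or an HTTP error such as 503: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Usage: `weaviate_ready("http://192.168.26.23:5435", api_key="<AUTHENTICATION_APIKEY_ALLOWED_KEYS value>")`.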

6.3.4 Sandbox & SSRF proxy

- Steps

- Directory layout

```text
dify-sanbox-ssrf/
├── docker-compose.yaml
├── sanbox.env
├── ssrf_proxy
│   ├── docker-entrypoint.sh
│   └── squid.conf.template
└── ssrfproxy.env
```

sanbox.env

```ini
# You can generate a strong key using `openssl rand -base64 42`
API_KEY=ZHN7IOQKwvHSNqshQnADT2CwfGL1vAw8twXF+yucVlAeVBsU9fU0/6Zr
GIN_MODE=release
WORKER_TIMEOUT=15
ENABLE_NETWORK=true
HTTP_PROXY=http://ssrf_proxy:3128
HTTPS_PROXY=http://ssrf_proxy:3128
SANDBOX_PORT=8194
```

ssrfproxy.env

```ini
HTTP_PORT=3128
COREDUMP_DIR=/var/spool/squid
REVERSE_PROXY_PORT=8194
SANDBOX_HOST=sandbox
SANDBOX_PORT=8194
```

ssrf_proxy files

Copy the project's docker/ssrf_proxy directory and its files here.

docker-compose.yaml

```yaml
version: '3.5'
services:
  sandbox:
    image: langgenius/dify-sandbox:0.2.7
    container_name: dify-sanbox
    restart: always
    env_file:
      - ./sanbox.env
    volumes:
      - ./sandbox/dependencies:/dependencies
    ports:
      - 8194:8194
  ssrf_proxy:
    image: ubuntu/squid:latest
    container_name: dify-ssrf-proxy
    restart: always
    env_file:
      - ./ssrfproxy.env
    volumes:
      - ./ssrf_proxy/squid.conf.template:/etc/squid/squid.conf.template
      - ./ssrf_proxy/docker-entrypoint.sh:/docker-entrypoint-mount.sh
    # strip Windows line endings (\r) from the mounted entrypoint before running it
    entrypoint: [ "sh", "-c", "cp /docker-entrypoint-mount.sh /docker-entrypoint.sh && sed -i 's/\r$$//' /docker-entrypoint.sh && chmod +x /docker-entrypoint.sh && /docker-entrypoint.sh" ]
    ports:
      - 3128:3128
```

- Start the service

```bash
docker-compose up -d
```
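With all five base services started, a quick TCP probe from the machine that will run Dify confirms the published ports are reachable before moving on to Kubernetes. This is a sketch: the port map is taken from the compose files in this section, and `BASE_HOST` is the example host address used in the Secret later in this guide; substitute your own. A successful TCP connect only proves the port is open, not that the service is healthy.

```python
import socket

# Host-side ports published by the compose files in section 6.3.
# BASE_HOST is the example address used elsewhere in this guide; replace it with yours.
BASE_HOST = "192.168.26.23"
SERVICES = {
    "postgres": 5434,
    "redis": 6389,
    "weaviate": 5435,
    "sandbox": 8194,
    "ssrf_proxy": 3128,
}


def probe(host: str, services: dict[str, int], timeout: float = 2.0) -> dict[str, bool]:
    """Map each service name to True if a TCP connection to host:port succeeds."""
    result = {}
    for name, port in services.items():
        try:
            with socket.create_connection((host, port), timeout=timeout):
                result[name] = True
        except OSError:
            result[name] = False
    return result
```

Usage: `probe(BASE_HOST, SERVICES)` returns something like `{"postgres": True, ...}`; any `False` points at a container that is down or a firewall rule that still needs opening.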

6.4 Deliver the frontend and backend to K8s

- Notes

- With the preparation from the previous sections done, Dify's frontend and backend can now be delivered to the Kubernetes cluster.

- What gets delivered (frontend and backend):

1. dify-api
2. dify-worker
3. dify-web

6.4.1 Prerequisite configuration

- Steps

namespace.yaml: creates the namespace

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dify
```

secrets.yaml: creates the Secret holding the credentials

```yaml
# check Base64 online https://base64.us/
apiVersion: v1
kind: Secret
metadata:
  name: dify-credentials
  namespace: dify
data:
  # Base64 encoded postgres username, default is postgres
  pg-username: cG9zdGdyZXM=
  # Base64 encoded postgres password, default is difyai123456
  pg-password: ZGlmeWFpMTIzNDU2
  # Base64 encoded postgres host, default is 192.168.26.23
  pg-host: MTkyLjE2OC4yNi4yMw==
  # Base64 encoded postgres port 5434
  pg-port: NTQzNA==
  # Base64 encoded redis username, default is empty
  redis-username: ''
  # Base64 encoded redis password, default is 25ensjk3LjoPJ
  redis-password: MjVlbnNqazNMam9QSg==
  # Base64 encoded redis host, default is 192.168.26.23
  redis-host: MTkyLjE2OC4yNi4yMw==
  # Base64 encoded redis port 6389
  redis-port: NjM4OQ==
  # Base64 encoded weaviate host, default is 192.168.26.23
  weaviate-host: MTkyLjE2OC4yNi4yMw==
  # Base64 encoded weaviate port 5435
  weaviate-port: NTQzNQ==
  # API SECRET_KEY 4PuhFCyu0CYtoXnAT7aca+Jk1I1Dt9R85EBqq+q6khKOPiiwBQIosz0D
  sk: NFB1aEZDeXUwQ1l0b1huQVQ3YWNhK0prMUkxRHQ5Ujg1RUJxcStxNmtoS09QaWl3QlFJb3N6MEQ=
  # INIT_PASSWORD As123456!
  init-passwd: QXMxMjM0NTYh
  # WEAVIATE_API_KEY WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih
  weaviate-api-key: V1ZGNVlUaGFIbGtZd2hHVVNtQ1Jnc1gzdEQ1bmdkTjhwa2lo
  # CODE_EXECUTION_API_KEY from the sandbox: ZHN7IOQKwvHSNqshQnADT2CwfGL1vAw8twXF+yucVlAeVBsU9fU0/6Zr
  sanbox-api-key: WkhON0lPUUt3dkhTTnFzaFFuQURUMkN3ZkdMMXZBdzh0d1hGK3l1Y1ZsQWVWQnNVOWZVMC82WnI=
type: Opaque
```

services.yaml

```yaml
---
# DIFY API SERVICE
apiVersion: v1
kind: Service
metadata:
  name: dify-api
  namespace: dify
spec:
  ports:
    - port: 5001
      targetPort: 5001
      protocol: TCP
      name: dify-api
  type: ClusterIP
  selector:
    app: dify-api
---
# DIFY WORKER SERVICE
apiVersion: v1
kind: Service
metadata:
  name: dify-worker
  namespace: dify
spec:
  ports:
    - protocol: TCP
      port: 5001
      targetPort: 5001
  selector:
    app: dify-worker
  type: ClusterIP
---
# DIFY WEB SERVICE
kind: Service
apiVersion: v1
metadata:
  name: dify-web
  namespace: dify
spec:
  selector:
    app: dify-web
  type: ClusterIP
  ports:
    - name: dify-web
      port: 3000
      targetPort: 3000
```
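Every value under `data:` in secrets.yaml must be base64 of the exact bytes, and the classic mistake is `echo value | base64`, which also encodes the trailing newline. Instead of an online tool, the values can be produced locally. A minimal sketch with Python's standard `base64` module; the helper names are illustrative.

```python
import base64


def b64(value: str) -> str:
    """Base64-encode a UTF-8 string for a Kubernetes Secret `data` field (no trailing newline)."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def secret_data(plain: dict[str, str]) -> dict[str, str]:
    """Encode every value of a plain-text mapping; an empty string stays empty (e.g. redis-username)."""
    return {key: b64(value) for key, value in plain.items()}
```

For example, `secret_data({"pg-username": "postgres", "pg-port": "5434"})` yields `{'pg-username': 'cG9zdGdyZXM=', 'pg-port': 'NTQzNA=='}`, matching the manifest above. Alternatively, `kubectl create secret generic dify-credentials -n dify --from-literal=pg-username=postgres ...` performs the encoding for you.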

6.4.2 Deliver the backend

- Steps

deploy-api.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: dify-api
  labels:
    app.kubernetes.io/instance: dify-api
    app: dify-api
  namespace: dify
spec:
  replicas: 1
  minReadySeconds: 10
  serviceName: dify-api
  selector:
    matchLabels:
      app: dify-api
  template:
    metadata:
      labels:
        app: dify-api
    spec:
      imagePullSecrets:
        - name: vpc-dify-registry
      containers:
        - name: dify-api
          image: hukanfa/dify-api:v0.8.0
          env:
            - name: MODE
              value: api
            - name: LOG_LEVEL
              value: DEBUG
            - name: BASE_HOST
              value: 192.168.26.23
            - name: CONSOLE_WEB_URL
              value: ''
            - name: CONSOLE_API_URL
              value: ''
            - name: SERVICE_API_URL
              value: ''
            - name: APP_WEB_URL
              value: ''
            - name: FILES_URL
              value: ''
            - name: MIGRATION_ENABLED
              value: 'true'
            - name: INIT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: init-passwd
            - name: SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: sk
            - name: DB_USERNAME
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-username
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-password
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-host
            - name: DB_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-port
            - name: DB_DATABASE
              value: dify
            - name: REDIS_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-host
            - name: REDIS_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-port
            # default redis username is empty
            - name: REDIS_USERNAME
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-username
            - name: REDIS_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-password
            - name: REDIS_USE_SSL
              value: 'false'
            - name: REDIS_DB
              value: '0'
            - name: CELERY_BROKER_URL
              value: >-
                redis://$(REDIS_USERNAME):$(REDIS_PASSWORD)@$(REDIS_HOST):$(REDIS_PORT)/1
            - name: WEB_API_CORS_ALLOW_ORIGINS
              value: '*'
            - name: CONSOLE_CORS_ALLOW_ORIGINS
              value: '*'
            # local filesystem storage, backed by the NFS volume mounted below
            - name: STORAGE_TYPE
              value: local
            - name: STORAGE_LOCAL_PATH
              value: /app/api/storage
            - name: VECTOR_STORE
              value: weaviate
            - name: WEAVIATE_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-host
            - name: WEAVIATE_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-port
            - name: WEAVIATE_ENDPOINT
              value: http://$(WEAVIATE_HOST):$(WEAVIATE_PORT)
            - name: WEAVIATE_API_KEY
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-api-key
            - name: CODE_EXECUTION_ENDPOINT
              value: http://$(BASE_HOST):8194
            - name: CODE_EXECUTION_API_KEY
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: sanbox-api-key
            - name: CODE_MAX_NUMBER
              value: '9223372036854775807'
            - name: CODE_MIN_NUMBER
              value: '-9223372036854775808'
            - name: CODE_MAX_STRING_LENGTH
              value: '80000'
            - name: TEMPLATE_TRANSFORM_MAX_LENGTH
              value: '80000'
            - name: CODE_MAX_STRING_ARRAY_LENGTH
              value: '30'
            - name: CODE_MAX_OBJECT_ARRAY_LENGTH
              value: '30'
            - name: CODE_MAX_NUMBER_ARRAY_LENGTH
              value: '1000'
            - name: INDEXING_MAX_SEGMENTATION_TOKENS_LENGTH
              value: '1000'
            - name: SSRF_PROXY_HTTP_URL
              value: 'http://$(BASE_HOST):3128'
            - name: SSRF_PROXY_HTTPS_URL
              value: 'http://$(BASE_HOST):3128'
          resources:
            requests:
              cpu: 200m
              memory: 256Mi
            limits:
              cpu: 1000m
              memory: 2Gi
          ports:
            - containerPort: 5001
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: dify-api-storage
              mountPath: /app/api/storage
      volumes:
        - name: dify-api-storage
          nfs:
            server: 192.168.26.21
            path: "/data/nfsDataShare/dify"
```

deploy-worker.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: dify-worker
  namespace: dify
  labels:
    app: dify-worker
    app.kubernetes.io/instance: dify-worker
spec:
  serviceName: 'dify-worker'
  replicas: 1
  minReadySeconds: 10
  selector:
    matchLabels:
      app: dify-worker
  template:
    metadata:
      labels:
        app: dify-worker
    spec:
      imagePullSecrets:
        - name: vpc-dify-registry
      restartPolicy: Always
      containers:
        - name: dify-worker
          image: hukanfa/dify-api:v0.8.0
          env:
            - name: MODE
              value: worker
            - name: LOG_LEVEL
              value: INFO
            - name: BASE_HOST
              value: 192.168.26.23
            - name: CONSOLE_WEB_URL
              value: ''
            - name: SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: sk
            - name: DB_USERNAME
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-username
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-password
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-host
            - name: DB_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: pg-port
            - name: DB_DATABASE
              value: dify
            - name: REDIS_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-host
            - name: REDIS_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-port
            # default redis username is empty
            - name: REDIS_USERNAME
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-username
            - name: REDIS_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: redis-password
            - name: REDIS_USE_SSL
              value: 'false'
            - name: REDIS_DB
              value: '0'
            - name: CELERY_BROKER_URL
              value: >-
                redis://$(REDIS_USERNAME):$(REDIS_PASSWORD)@$(REDIS_HOST):$(REDIS_PORT)/1
            - name: WEB_API_CORS_ALLOW_ORIGINS
              value: '*'
            - name: CONSOLE_CORS_ALLOW_ORIGINS
              value: '*'
            # local filesystem storage, backed by the NFS volume mounted below
            - name: STORAGE_TYPE
              value: local
            - name: STORAGE_LOCAL_PATH
              value: /app/api/storage
            - name: VECTOR_STORE
              value: weaviate
            - name: WEAVIATE_HOST
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-host
            - name: WEAVIATE_PORT
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-port
            - name: WEAVIATE_ENDPOINT
              value: http://$(WEAVIATE_HOST):$(WEAVIATE_PORT)
            - name: WEAVIATE_API_KEY
              valueFrom:
                secretKeyRef:
                  name: dify-credentials
                  key: weaviate-api-key
            - name: SSRF_PROXY_HTTP_URL
              value: 'http://$(BASE_HOST):3128'
            - name: SSRF_PROXY_HTTPS_URL
              value: 'http://$(BASE_HOST):3128'
          resources:
            requests:
              cpu: 200m
              memory: 256Mi
            limits:
              cpu: 1000m
              memory: 2Gi
          ports:
            - containerPort: 5001
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: dify-api-storage
              mountPath: /app/api/storage
      volumes:
        - name: dify-api-storage
          nfs:
            server: 192.168.26.21
            path: "/data/nfsDataShare/dify"
```

- To enable email, also add the following environment variables:

```yaml
# Note: the SMTP password is kept in the Secret (key mail-smtp-passwd in dify-credentials).
# MAIL_DEFAULT_SEND_FROM must be an email address.
- name: MAIL_TYPE
  value: 'smtp'
- name: MAIL_DEFAULT_SEND_FROM
  value: 'jishu.xxx@xxx.com'
- name: SMTP_SERVER
  value: 'smtp.xxx.com'
- name: SMTP_PORT
  value: '587'
- name: SMTP_USERNAME
  value: 'jishu.xxx@xxx.com'
- name: SMTP_PASSWORD
  valueFrom:
    secretKeyRef:
      name: dify-credentials
      key: mail-smtp-passwd
- name: SMTP_USE_TLS
  value: 'false'
- name: SMTP_OPPORTUNISTIC_TLS
  value: 'false'
```
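The api and worker manifests build `CELERY_BROKER_URL` and `WEAVIATE_ENDPOINT` from other variables with `$(VAR)` references, which Kubernetes expands only when `VAR` is defined earlier in the same container's `env` list. The sketch below models that rule to show what the broker URL resolves to with the example Secret values. It is a simplified model, not Kubernetes code: it assumes `$$` escapes to a literal `$` and unresolved references stay as written, and the function names are illustrative.

```python
import re

# "$$" (escape) or "$(NAME)" (reference)
_REF = re.compile(r"\$\$|\$\(([A-Za-z_][A-Za-z0-9_]*)\)")


def expand(value: str, env: dict[str, str]) -> str:
    """Expand $(NAME) using previously defined variables; leave unknown references unchanged."""
    def sub(m: re.Match) -> str:
        if m.group(0) == "$$":
            return "$"
        return env.get(m.group(1), m.group(0))
    return _REF.sub(sub, value)


def resolve(env_list: list[tuple[str, str]]) -> dict[str, str]:
    """Resolve an ordered list of (name, value) pairs the way a container env block is read."""
    env: dict[str, str] = {}
    for name, value in env_list:
        env[name] = expand(value, env)
    return env
```

With `REDIS_USERNAME` empty, password `25ensjk3LjoPJ`, host `192.168.26.23` and port `6389`, the broker URL resolves to `redis://:25ensjk3LjoPJ@192.168.26.23:6389/1`. Two consequences: the `REDIS_*` entries must stay above `CELERY_BROKER_URL` in the list, and a Redis password containing URL-reserved characters such as `@` or `/` would corrupt the URL.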

6.4.3 Deliver the frontend

- Steps

deploy-web.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dify-web
  namespace: dify
  labels:
    app: dify-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dify-web
  template:
    metadata:
      labels:
        app: dify-web
    spec:
      imagePullSecrets:
        - name: vpc-dify-registry
      automountServiceAccountToken: false
      containers:
        - name: dify-web
          image: hukanfa/dify-web:v0.8.0-rockylinux9.3
          env:
            - name: EDITION
              value: SELF_HOSTED
            - name: CONSOLE_API_URL
              value: ""
            - name: APP_API_URL
              value: ""
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 1Gi
          ports:
            - containerPort: 3000
          imagePullPolicy: IfNotPresent
```

6.5 Access configuration

- Steps

dify-ingress.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  labels:
    ingress-controller: nginx
  name: dify-ingress
  namespace: dify
spec:
  ingressClassName: ack-nginx
  rules:
    - host: ai.hukanfa.com
      http:
        paths:
          - backend:
              service:
                name: dify-web
                port:
                  number: 3000
            path: /
            pathType: ImplementationSpecific
          - backend:
              service:
                name: dify-api
                port:
                  number: 5001
            path: /api
            pathType: ImplementationSpecific
          - backend:
              service:
                name: dify-api
                port:
                  number: 5001
            path: /v1
            pathType: ImplementationSpecific
          - backend:
              service:
                name: dify-api
                port:
                  number: 5001
            path: /files
            pathType: ImplementationSpecific
          - backend:
              service:
                name: dify-api
                port:
                  number: 5001
            path: /console/api
            pathType: ImplementationSpecific
status:
  loadBalancer:
    ingress:
      - ip: 192.168.2.11
```

upstream.conf

```nginx
upstream dify_ingress {
    server 192.168.2.11:80;
}
```

ai.hukanfa.com.conf (Nginx configuration)

```nginx
# AI platform
server {
    listen 80;
    listen 443 ssl;
    server_name ai.hukanfa.com;

    ssl_certificate certs/hukanfa.com.crt;
    ssl_certificate_key certs/hukanfa.com.key;

    access_log logs/ai_access.log main;
    error_log logs/ai_error.log notice;

    location /robots.txt {
        root /usr/local/nginx/html;
        index index.html;
    }

    location /console/api {
        proxy_pass http://dify_ingress;
        include proxy_dify.conf;
    }

    location /api {
        proxy_pass http://dify_ingress;
        include proxy_dify.conf;
    }

    location /v1 {
        proxy_pass http://dify_ingress;
        include proxy_dify.conf;
    }

    location /files {
        proxy_pass http://dify_ingress;
        include proxy_dify.conf;
    }

    location / {
        proxy_pass http://dify_ingress;
        include proxy_dify.conf;
    }
}
```

proxy_dify.conf

```nginx
# adapted from docker/nginx/proxy.conf.template
proxy_set_header Host $host;
proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
proxy_set_header X-Forwarded-Proto $scheme;
proxy_http_version 1.1;
proxy_set_header Connection "";
proxy_buffering off;
proxy_read_timeout 3600s;
proxy_send_timeout 3600s;
```

- Access the UI

https://ai.hukanfa.com
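Both the front Nginx and the Ingress split traffic by path prefix: `/console/api`, `/api`, `/v1` and `/files` go to dify-api, everything else to dify-web. The sketch below models that as longest-prefix matching over plain string prefixes, which is how the non-regex `location /prefix` blocks above behave. Treating the Ingress `ImplementationSpecific` paths the same way is an assumption about the ack-nginx controller, and the names are illustrative.

```python
# Path prefix -> Kubernetes service:port, as configured in the Ingress and Nginx above
ROUTES = {
    "/console/api": "dify-api:5001",
    "/api": "dify-api:5001",
    "/v1": "dify-api:5001",
    "/files": "dify-api:5001",
    "/": "dify-web:3000",
}


def backend_for(path: str, routes: dict[str, str] = ROUTES) -> str:
    """Return the service:port whose prefix is the longest string prefix of `path` (path must start with '/')."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    return routes[max(matches, key=len)]
```

For example, `backend_for("/console/api/setup")` gives `dify-api:5001`, while `backend_for("/apps")` falls through to `dify-web:3000` because `/apps` does not start with `/api`. Since matching is by raw string prefix, a path such as `/api-docs` would also be sent to dify-api.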

6.6 Version upgrades

- Notes (merging upstream code into a locally customized codebase is risky; make backups first)

- This section explains how to bring locally customized code up to the latest official release.

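- For consistency, make sure your Dify setup was built following the steps in the previous sections.
- The example in this section upgrades from v0.8.0 to v0.11.1.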

- Caveats
- Before upgrading, save the output of flask db history:

```bash
# inside the container, in /app/api; keep the output, it is needed later
flask db history
```

6.6.1 Preliminary steps

- Steps

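- Sync the latest official branch into the local repository

1. In the private code repository, create a new branch release/v0.11.1 from master.
2. Pull the release/v0.11.1 branch locally.
3. Download the zip of the official main branch: https://github.com/langgenius/dify/tree/main
4. Unzip the official archive, copy all its files into the local branch directory, and overwrite everything.
5. Push all changes to the private code repository.
6. From release/v0.11.1, create a new branch f_update_v0.11.0 for the follow-up adaptation changes.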

6.6.2 Frontend adjustments

- Steps

yarn.lock: point the registry at a mirror inside China or at a self-hosted proxy repository

```bash
# Option 1: npmmirror (registry.npmmirror.com)
sed -i 's#registry.npmjs.org#registry.npmmirror.com#g' web/yarn.lock
sed -i 's#registry.yarnpkg.com#registry.npmmirror.com#g' web/yarn.lock
# Option 2: self-hosted repository http://nexus.xxx.com/repository/npm-group/
sed -i 's#https://registry.npmjs.org#http://nexus.xxx.com/repository/npm-group#g' web/yarn.lock
sed -i 's#https://registry.yarnpkg.com#http://nexus.xxx.com/repository/npm-group#g' web/yarn.lock
```

Note: a private repository created with Nexus must be addressed over http.

Dockerfile

Use the Dockerfile_web in the project root. When switching to the private repository there as well, use http, otherwise the build fails with certificate errors.

6.6.3 Backend adjustments

- Steps

api/Dockerfile

```dockerfile
# base image
# FROM python:3.10-slim-bookworm AS base
# BASE_IMAGES is a placeholder for your own base image; override it with --build-arg
ARG BASE_IMAGES=python:3.10-slim-bookworm
FROM ${BASE_IMAGES} AS base

WORKDIR /app/api

# Install Poetry
ENV POETRY_VERSION=1.8.4

# if you located in China, you can use aliyun mirror to speed up
# RUN pip install --no-cache-dir poetry==${POETRY_VERSION} -i https://mirrors.aliyun.com/pypi/simple/
# --trusted-host must name the same plain-http Nexus host used in -i
RUN pip install --no-cache-dir poetry==${POETRY_VERSION} --trusted-host nexus.xxx.com -i http://nexus.xxx.com/repository/python-group/simple/

# Configure Poetry
ENV POETRY_CACHE_DIR=/tmp/poetry_cache
ENV POETRY_NO_INTERACTION=1
ENV POETRY_VIRTUALENVS_IN_PROJECT=true
ENV POETRY_VIRTUALENVS_CREATE=true
ENV POETRY_REQUESTS_TIMEOUT=15

FROM base AS packages

# if you located in China, you can use aliyun mirror to speed up
# RUN sed -i 's@deb.debian.org@mirrors.aliyun.com@g' /etc/apt/sources.list.d/debian.sources

RUN sed -i 's@deb.debian.org@mirrors.tuna.tsinghua.edu.cn@g' /etc/apt/sources.list.d/debian.sources \
    && apt-get update \
    && apt-get install -y --no-install-recommends gcc g++ libc-dev libffi-dev libgmp-dev libmpfr-dev libmpc-dev

# Install Python dependencies
COPY pyproject.toml poetry.lock ./
#RUN poetry source add --priority=primary mirrors http://nexus.xxx.com/repository/python-group/simple/ && \
#    poetry lock --no-update && \
#    poetry install --sync --no-cache --no-root
RUN poetry install --sync --no-cache --no-root

# production stage
FROM base AS production

ENV FLASK_APP=app.py
ENV EDITION=SELF_HOSTED
ENV DEPLOY_ENV=PRODUCTION
ENV CONSOLE_API_URL=http://127.0.0.1:5001
ENV CONSOLE_WEB_URL=http://127.0.0.1:3000
ENV SERVICE_API_URL=http://127.0.0.1:5001
ENV APP_WEB_URL=http://127.0.0.1:3000

EXPOSE 5001

# set timezone
ENV TZ=UTC

WORKDIR /app/api

RUN sed -i 's@deb.debian.org@mirrors.tuna.tsinghua.edu.cn@g' /etc/apt/sources.list.d/debian.sources \
    && apt-get update \
    && apt-get install -y --no-install-recommends curl nodejs libgmp-dev libmpfr-dev libmpc-dev \
    # if you located in China, you can use aliyun mirror to speed up
    # && echo "deb http://mirrors.aliyun.com/debian testing main" > /etc/apt/sources.list \
    && echo "deb http://nexus.xxx.com/repository/apt-proxy-debian testing main" > /etc/apt/sources.list \
    && apt-get update \
    # For Security
    && apt-get install -y --no-install-recommends expat=2.6.3-2 libldap-2.5-0=2.5.18+dfsg-3+b1 perl=5.40.0-7 libsqlite3-0=3.46.1-1 zlib1g=1:1.3.dfsg+really1.3.1-1+b1 \
    # install a chinese font to support the use of tools like matplotlib
    && apt-get install -y fonts-noto-cjk \
    && apt-get autoremove -y \
    && rm -rf /var/lib/apt/lists/*

# Copy Python environment and packages
ENV VIRTUAL_ENV=/app/api/.venv
COPY --from=packages ${VIRTUAL_ENV} ${VIRTUAL_ENV}
ENV PATH="${VIRTUAL_ENV}/bin:${PATH}"

# Copy source code
COPY --from=packages /app/api/poetry.lock /app
COPY . /app/api/

# Download nltk data
RUN mv /app/api/nltk_data /root \
    && python -c "import nltk; nltk.download('punkt'); nltk.download('averaged_perceptron_tagger')"

# Copy entrypoint
COPY docker/entrypoint.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh

ARG COMMIT_SHA
ENV COMMIT_SHA=${COMMIT_SHA}

ENTRYPOINT ["/bin/bash", "/entrypoint.sh"]
```

- Update the database schema

[Back up first]

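```bash
# api/docker/entrypoint.sh contains the following check
if [[ "${MIGRATION_ENABLED}" == "true" ]]; then
  echo "Running migrations"
  flask upgrade-db
fi
```

Check the value of MIGRATION_ENABLED:

1. Docker deployment: the variable is defined in docker/docker-compose.yaml as `MIGRATION_ENABLED: ${MIGRATION_ENABLED:-true}`.
2. Source deployment: the variable is in docker/.env.example. The official docs refer to api/.env.example, but that file does not contain this variable, so if you used the file the docs describe, you must also run `flask upgrade-db` manually in the api directory.
3. Kubernetes delivery: add this variable to the deploy.yaml template with the value true.

api/pyproject.toml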

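```toml
# Append the proxy source at the end of the file; a private repository is used here.
# Inside China, the Alibaba Cloud or Tsinghua mirrors also work.
[[tool.poetry.source]]
name = "mirrors"
url = "http://nexus.xxx.com/repository/python-group/simple/"
priority = "primary"
```

api/poetry.lock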

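```dockerfile
## This file is copied out of the container (/app/poetry.lock) once the service runs normally,
## then pushed to the private remote repository.
## To produce the updated file, uncomment the lines below and comment out the last line;
## once the new file has been pushed to the private repository, reverse the change.
#RUN poetry source add --priority=primary mirrors http://nexus.xxx.com/repository/python-group/simple/ && \
#    poetry lock --no-update && \
#    poetry install --sync --no-cache --no-root
RUN poetry install --sync --no-cache --no-root
```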

6.6.4 Update environment variables

- Steps

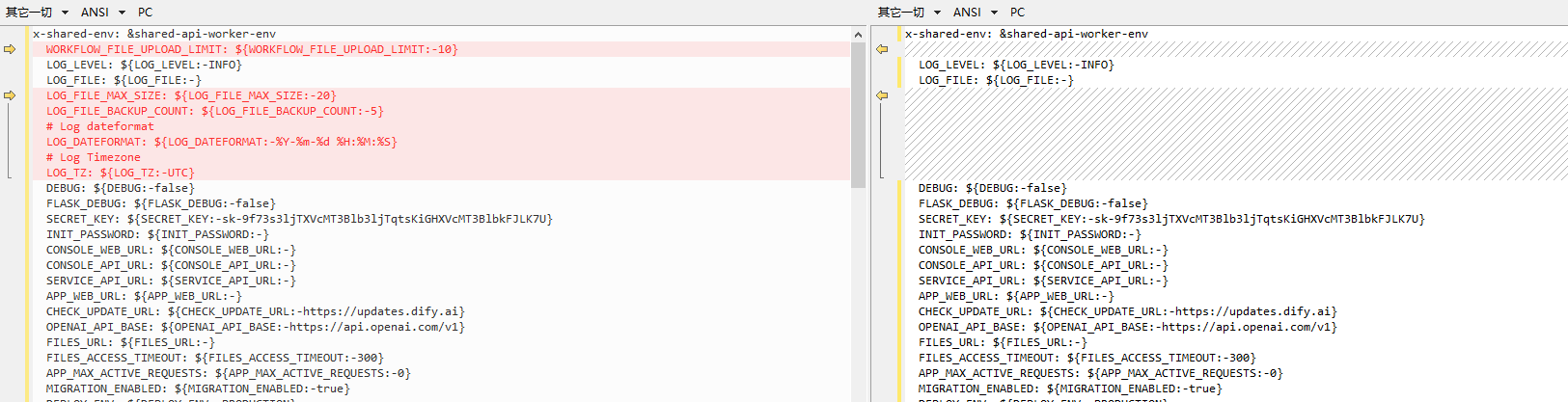

- Compare the environment variables in docker/docker-compose.yaml between the old and new versions, and decide whether each change must be applied.
- Based on that comparison, update the deploy.yaml templates used to deliver api and worker to Kubernetes:

```yaml
# add the following settings
- name: WORKFLOW_FILE_UPLOAD_LIMIT
  value: '10'
- name: LOG_FILE_MAX_SIZE
  value: '20'
- name: ACCESS_TOKEN_EXPIRE_MINUTES
  value: '60'
- name: LOG_FILE_BACKUP_COUNT
  value: '5'
- name: LOG_DATEFORMAT
  value: '%Y-%m-%d %H:%M:%S'
- name: LOG_TZ
  value: 'Asia/Shanghai'
- name: PROMPT_GENERATION_MAX_TOKENS
  value: '512'
- name: CODE_GENERATION_MAX_TOKENS
  value: '1024'
- name: MULTIMODAL_SEND_VIDEO_FORMAT
  value: 'base64'
- name: UPLOAD_VIDEO_FILE_SIZE_LIMIT
  value: '100'
- name: UPLOAD_AUDIO_FILE_SIZE_LIMIT
  value: '50'
- name: RESET_PASSWORD_TOKEN_EXPIRY_MINUTES
  value: '5'
- name: WORKFLOW_MAX_EXECUTION_STEPS
  value: '500'
- name: WORKFLOW_MAX_EXECUTION_TIME
  value: '1200'
- name: WORKFLOW_CALL_MAX_DEPTH
  value: '5'
- name: HTTP_REQUEST_NODE_MAX_BINARY_SIZE
  value: '10485760'
- name: HTTP_REQUEST_NODE_MAX_TEXT_SIZE
  value: '1048576'
- name: APP_MAX_EXECUTION_TIME
  value: '12000'
```
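The old/new comparison can be partly automated. The sketch below diffs two `KEY=VALUE` files and reports added, removed and changed keys. It deliberately uses docker/.env.example from the two releases instead of docker-compose.yaml, a substitution made so it needs only the standard library (no YAML parser); this assumes your checkouts contain that file, and the function names are illustrative.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def diff_env(old_text: str, new_text: str) -> dict[str, list[str]]:
    """Return sorted lists of keys added, removed, and changed between two env files."""
    old, new = parse_env(old_text), parse_env(new_text)
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

The `added` list is the candidate set for the deploy.yaml additions above; each entry still needs a human decision on whether the new default suits your deployment.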

6.6.5 Troubleshooting

6.6.5.1 Database migration fails

- Steps

- References

These articles explain why the problem occurs; the fix is worked out in the analysis below, so adapt their remedies to your situation.

https://www.arundhaj.com/blog/multiple-head-revisions-present-error-flask-migrate.html
https://blog.jerrycodes.com/multiple-heads-in-alembic-migrations/

- Error message

```text
## Error: two head branches
ERROR [flask_migrate] Error: Multiple head revisions are present for given argument 'head'; please specify a specific target revision, '<branchname>@head' to narrow to a specific head, or 'heads' for all heads
```

- Get the conflicting revision ids

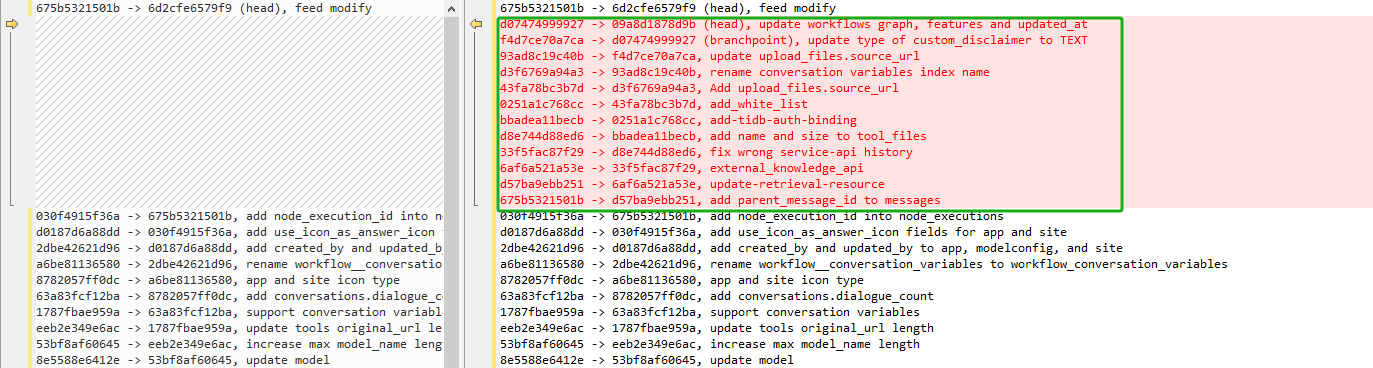

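```bash
# First, in api/docker/entrypoint.sh, add a sleep before the database migration to leave time to act
if [[ "${MIGRATION_ENABLED}" == "true" ]]; then
  sleep 300
  echo "Running migrations"
  flask upgrade-db
fi

# Once the application container is up, enter it promptly and run the following;
# the output shows two head branches
flask db history
```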

- Analysis of the history output above:

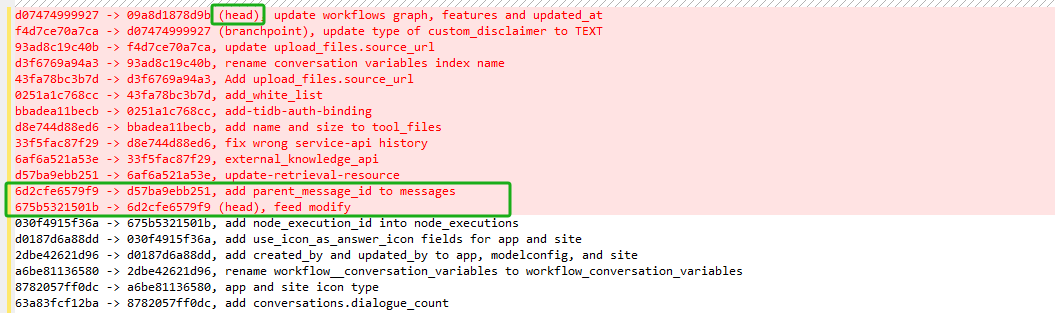

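1. `675b5321501b -> 6d2cfe6579f9 (head), feed modify` is the branch committed during local customization.
2. The branches highlighted inside the green box were added by this update.
3. Upstream always maintains its own chain; local commits are treated as a separate, extra branch.
4. You therefore have to merge the local branch and the upstream one into a single chain by hand, and this must be repeated every time you merge the latest upstream code.
5. Fix: move 6d2cfe6579f9 down the chain and make it the parent (down_revision) of d57ba9ebb251, so the history becomes 675b5321501b -> 6d2cfe6579f9 -> d57ba9ebb251 -> ...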

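- To actually apply this adjustment, edit the down_revision in the following files:

```python
## Path: dify/api/migrations/versions; find the files by revision id
# in the file whose name contains 6d2cfe6579f9:
revision = '6d2cfe6579f9'
down_revision = '675b5321501b'

# in the file whose name contains d57ba9ebb251:
revision = 'd57ba9ebb251'
down_revision = '6d2cfe6579f9'
```

- Save these changes, push them to the repository, and redeploy. Make sure MIGRATION_ENABLED is set to true.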

6.7 Deliver Sandbox & SSRF proxy to K8s (optional)

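- Notes

- The base-services section above showed how to deliver Sandbox and SSRF proxy with docker-compose, but that setup is only suitable for test environments.

- This section shows how to deliver Sandbox and SSRF proxy to the Kubernetes cluster instead, to meet production elasticity needs and improve the user experience.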

6.7.1 Sandbox

- Steps

configmap-sandbox.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: sandbox-config
  namespace: dify
data:
  config.yaml: |
    app:
      port: 8194
      debug: True
      key: dify-sandbox
    max_workers: 15
    max_requests: 100
    worker_timeout: 15
    python_path: /usr/local/bin/python3
    enable_network: True # please make sure there is no network risk in your environment
    enable_preload: False # please keep it as False for security purposes
    allowed_syscalls: # please leave it empty if you have no idea how seccomp works
    proxy:
      socks5: ''
      http: 'http://dify-ssrf:3128'
      https: 'http://dify-ssrf:3128'
```

deployment-sandbox.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dify-sandbox
  namespace: dify
  labels:
    app: dify-sandbox
spec:
  replicas: 1
  revisionHistoryLimit: 1
  selector:
    matchLabels:
      app: dify-sandbox
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: dify-sandbox
    spec:
      automountServiceAccountToken: false
      imagePullSecrets:
        - name: public-docker-registry
      containers:
        - name: dify-sandbox
          image: registry.xxx.com/dify-sandbox:0.2.10
          env:
            - name: API_KEY
              value: "ZHN7IOQKwvHSNqshQnADT2CwfGL1vAw8twXF+yucVlAeVBsU9fU0/6Zr"
            - name: GIN_MODE
              value: release
          volumeMounts:
            - name: sandbox-config
              mountPath: /conf/
          resources:
            requests:
              cpu: 200m
              memory: 256Mi
            limits:
              cpu: 500m
              memory: 1Gi
          ports:
            - containerPort: 8194
          imagePullPolicy: IfNotPresent
      volumes:
        - name: sandbox-config
          configMap:
            name: sandbox-config
---
apiVersion: v1
kind: Service
metadata:
  name: dify-sandbox
  namespace: dify
spec:
  ports:
    - port: 8194
      targetPort: 8194
      protocol: TCP
      name: dify-sandbox
  type: ClusterIP
  clusterIP: None
  selector:
    app: dify-sandbox
```

- Deploy

# The imagePullSecrets must be created in advance
kubectl apply -f configmap-sandbox.yaml
kubectl apply -f deployment-sandbox.yaml

Notes

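1. Wherever the configuration above connects to another service, it uses that service's Service name, e.g. dify-ssrf in http://dify-ssrf:3128.
2. Some ConfigMap settings have been adjusted; set them according to your own needs: max_workers: 15, max_requests: 100, worker_timeout: 15.
3. replicas: 1; adjust the number of instances to your actual load.
4. Make sure API_KEY is correct (it must match the CODE_EXECUTION_API_KEY configured for the Dify api service); otherwise using models in the UI fails with a 401 unauthorized error.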
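Note 4 is easy to get wrong because the key lives in two manifests: the sandbox's `API_KEY` and the Dify api's `CODE_EXECUTION_API_KEY`. The sketch below is a minimal pre-deploy check. It assumes both `env` lists have already been loaded into Kubernetes-style `[{"name": ..., "value": ...}]` form. `env_value` and `sandbox_key_matches` are hypothetical helpers, and the key values are placeholders.

```python
# Hypothetical pre-deploy check: the sandbox's API_KEY must equal the
# CODE_EXECUTION_API_KEY used by the Dify api service, otherwise the
# sandbox rejects the api's requests as unauthorized.
from typing import Dict, List, Optional

EnvList = List[Dict[str, str]]  # Kubernetes-style `env` entries


def env_value(env: EnvList, name: str) -> Optional[str]:
    """Return the value of `name` in a Kubernetes-style env list, if present."""
    for item in env:
        if item.get("name") == name:
            return item.get("value")
    return None


def sandbox_key_matches(sandbox_env: EnvList, api_env: EnvList) -> bool:
    """True only if both keys are set and identical."""
    sandbox_key = env_value(sandbox_env, "API_KEY")
    api_key = env_value(api_env, "CODE_EXECUTION_API_KEY")
    return sandbox_key is not None and sandbox_key == api_key


# Placeholder values; use the real ones from your manifests.
sandbox_env = [{"name": "API_KEY", "value": "example-key"},
               {"name": "GIN_MODE", "value": "release"}]
api_env = [{"name": "CODE_EXECUTION_ENDPOINT", "value": "http://dify-sandbox:8194"},
           {"name": "CODE_EXECUTION_API_KEY", "value": "example-key"}]
print(sandbox_key_matches(sandbox_env, api_env))  # True
```

Treating a missing key as a mismatch is deliberate: an unset key on either side is as likely to cause the 401 as two different values.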

6.7.2 Ssrf

- Steps

configmap-ssrf.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: ssrf-proxy-config
  namespace: dify
data:
  squid.conf: |
    acl localnet src 0.0.0.1-0.255.255.255  # RFC 1122 "this" network (LAN)
    acl localnet src 10.0.0.0/8             # RFC 1918 local private network (LAN)
    acl localnet src 100.64.0.0/10          # RFC 6598 shared address space (CGN)
    acl localnet src 169.254.0.0/16         # RFC 3927 link-local (directly plugged) machines
    acl localnet src 172.16.0.0/12          # RFC 1918 local private network (LAN)
    acl localnet src 192.168.0.0/16         # RFC 1918 local private network (LAN)
    acl localnet src fc00::/7               # RFC 4193 local private network range
    acl localnet src fe80::/10              # RFC 4291 link-local (directly plugged) machines
    acl SSL_ports port 443
    acl Safe_ports port 80          # http
    acl Safe_ports port 21          # ftp
    acl Safe_ports port 443         # https
    acl Safe_ports port 70          # gopher
    acl Safe_ports port 210         # wais
    acl Safe_ports port 1025-65535  # unregistered ports
    acl Safe_ports port 280         # http-mgmt
    acl Safe_ports port 488         # gss-http
    acl Safe_ports port 591         # filemaker
    acl Safe_ports port 777         # multiling http
    acl CONNECT method CONNECT
    http_access deny !Safe_ports
    http_access deny CONNECT !SSL_ports
    http_access allow localhost manager
    http_access deny manager
    http_access allow localhost
    http_access allow localnet
    http_access deny all

    ################################## Proxy Server ################################
    http_port 3128
    coredump_dir /var/spool/squid
    refresh_pattern ^ftp:           1440    20%     10080
    refresh_pattern ^gopher:        1440    0%      1440
    refresh_pattern -i (/cgi-bin/|\?) 0     0%      0
    refresh_pattern \/(Packages|Sources)(|\.bz2|\.gz|\.xz)$ 0 0% 0 refresh-ims
    refresh_pattern \/Release(|\.gpg)$ 0 0% 0 refresh-ims
    refresh_pattern \/InRelease$ 0 0% 0 refresh-ims
    refresh_pattern \/(Translation-.*)(|\.bz2|\.gz|\.xz)$ 0 0% 0 refresh-ims
    refresh_pattern .               0       20%     4320

    # upstream proxy, set to your own upstream proxy IP to avoid SSRF attacks
    # cache_peer 172.1.1.1 parent 3128 0 no-query no-digest no-netdb-exchange default

    ################################## Reverse Proxy To Sandbox ################################
    http_port 8194 accel vhost
    # Notice:
    # default is 'sandbox' in dify's github repo, here is 'dify-sandbox' because the service name of sandbox is 'dify-sandbox'
    # you can change it to your own service name
    cache_peer dify-sandbox parent 8194 0 no-query originserver
    acl src_all src all
    http_access allow src_all
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ssrf-proxy-entrypoint
  namespace: dify
data:
  docker-entrypoint-mount.sh: |
    #!/bin/bash

    # Modified based on Squid OCI image entrypoint

    # This entrypoint aims to forward the squid logs to stdout to assist users of
    # common container related tooling (e.g., kubernetes, docker-compose, etc) to
    # access the service logs.

    # Moreover, it invokes the squid binary, leaving all the desired parameters to
    # be provided by the "command" passed to the spawned container. If no command
    # is provided by the user, the default behavior (as per the CMD statement in
    # the Dockerfile) will be to use Ubuntu's default configuration [1] and run
    # squid with the "-NYC" options to mimic the behavior of the Ubuntu provided
    # systemd unit.

    # [1] The default configuration is changed in the Dockerfile to allow local
    # network connections. See the Dockerfile for further information.

    echo "[ENTRYPOINT] re-create snakeoil self-signed certificate removed in the build process"
    if [ ! -f /etc/ssl/private/ssl-cert-snakeoil.key ]; then
        /usr/sbin/make-ssl-cert generate-default-snakeoil --force-overwrite > /dev/null 2>&1
    fi

    tail -F /var/log/squid/access.log 2>/dev/null &
    tail -F /var/log/squid/error.log 2>/dev/null &
    tail -F /var/log/squid/store.log 2>/dev/null &
    tail -F /var/log/squid/cache.log 2>/dev/null &

    # Replace environment variables in the template and output to the squid.conf
    echo "[ENTRYPOINT] replacing environment variables in the template"
    awk '{
        while(match($0, /\${[A-Za-z_][A-Za-z_0-9]*}/)) {
            var = substr($0, RSTART+2, RLENGTH-3)
            val = ENVIRON[var]
            $0 = substr($0, 1, RSTART-1) val substr($0, RSTART+RLENGTH)
        }
        print
    }' /etc/squid/squid.conf.template > /etc/squid/squid.conf

    /usr/sbin/squid -Nz
    echo "[ENTRYPOINT] starting squid"
    /usr/sbin/squid -f /etc/squid/squid.conf -NYC 1

deployment-ssrf.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dify-ssrf
  namespace: dify
  labels:
    app: dify-ssrf
spec:
  selector:
    matchLabels:
      app: dify-ssrf
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: dify-ssrf
    spec:
      imagePullSecrets:
        - name: public-docker-registry
      containers:
        - name: dify-ssrf
          image: registry.xxx.com/ubuntu/squid:6.6-24.04_edge
          env:
            - name: HTTP_PORT
              value: "3128"
            - name: COREDUMP_DIR
              value: "/var/spool/squid"
            - name: REVERSE_PROXY_PORT
              value: "8194"
            - name: SANDBOX_HOST
              value: "dify-sandbox"
            - name: SANDBOX_PORT
              value: "8194"
          resources:
            requests:
              cpu: 200m
              memory: 400Mi
            limits:
              cpu: 1000m
              memory: 1Gi
          ports:
            - containerPort: 3128
              name: dify-ssrf
          volumeMounts:
            - name: ssrf-proxy-config
              mountPath: /etc/squid/
            - name: ssrf-proxy-entrypoint
              mountPath: /tmp/
          command: [ "sh", "-c", "cp /tmp/docker-entrypoint-mount.sh /docker-entrypoint.sh && sed -i 's/\r$$//' /docker-entrypoint.sh && chmod +x /docker-entrypoint.sh && /docker-entrypoint.sh" ]
      volumes:
        - name: ssrf-proxy-config
          configMap:
            name: ssrf-proxy-config
        - name: ssrf-proxy-entrypoint
          configMap:
            name: ssrf-proxy-entrypoint
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: dify-ssrf
  namespace: dify
spec:
  selector:
    app: dify-ssrf
  ports:
    - protocol: TCP
      port: 3128
      targetPort: 3128

- Deploy

# The imagePullSecrets must be created in advance
kubectl apply -f configmap-ssrf.yaml
kubectl apply -f deployment-ssrf.yaml

Notes

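1. Wherever the configuration above connects to another service, it uses that service's Service name, e.g. dify-sandbox in the ConfigMap and the Deployment.
2. replicas: 1; adjust the number of instances to your actual load.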
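The proxy section of the squid.conf above only lets clients through via `http_access allow localnet`. Squid applies the first matching `http_access` rule, so a client outside the localnet ranges hits `http_access deny all`. The Python sketch below is a rough way to check whether your api/worker pod IPs fall inside those ranges. The pod IPs in it are hypothetical examples, and the first ACL (`0.0.0.1-0.255.255.255`) is approximated as `0.0.0.0/8`.

```python
# Rough check of whether a client IP (e.g. an api/worker pod IP) is covered
# by the `localnet` ACL in the squid.conf above. Requests from addresses
# outside these ranges fall through to `http_access deny all`.
import ipaddress

# CIDR equivalents of the localnet lines. The first ACL,
# 0.0.0.1-0.255.255.255, is approximated as 0.0.0.0/8 here.
LOCALNET = [ipaddress.ip_network(cidr) for cidr in (
    "0.0.0.0/8", "10.0.0.0/8", "100.64.0.0/10", "169.254.0.0/16",
    "172.16.0.0/12", "192.168.0.0/16", "fc00::/7", "fe80::/10",
)]


def in_localnet(ip: str) -> bool:
    """True if `ip` falls inside any localnet range.

    An IPv4 address is never "in" an IPv6 network (and vice versa);
    the ipaddress module simply returns False for such pairs.
    """
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in LOCALNET)


# Example pod IPs are hypothetical; check your own cluster's pod CIDR.
for ip in ("10.244.1.23", "100.96.0.5", "8.8.8.8"):
    print(ip, in_localnet(ip))
```

If your pod CIDR is not covered, one option is to add it as another `acl localnet src ...` line in the ConfigMap.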

6.7.3 Backend adjustments

- Steps

- Api adjustments

Changes to deployment_demo.yaml:

# Remove the BASE_HOST variable
- name: BASE_HOST
  value: 192.168.26.23
# Replace the BASE_HOST-based addresses with Service names
- name: CODE_EXECUTION_ENDPOINT
  value: http://dify-sandbox:8194
- name: SSRF_PROXY_HTTP_URL
  value: 'http://dify-ssrf:3128'
- name: SSRF_PROXY_HTTPS_URL
  value: 'http://dify-ssrf:3128'

- Worker adjustments

Changes to deployment_demo.yaml:

# Remove the BASE_HOST variable
- name: BASE_HOST
  value: 192.168.26.23
# Replace the BASE_HOST-based addresses with Service names
- name: SSRF_PROXY_HTTP_URL
  value: 'http://dify-ssrf:3128'
- name: SSRF_PROXY_HTTPS_URL
  value: 'http://dify-ssrf:3128'

- Api adjustments
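The short hostnames used above (dify-sandbox, dify-ssrf) only resolve from pods in the same namespace (dify here). This is because Kubernetes DNS expands bare names using the pod's own namespace. If the api or worker runs in a different namespace, the fully qualified form `<service>.<namespace>.svc.<cluster-domain>` is needed. The Python helper below is a hypothetical sketch of that rewrite. It assumes the common default cluster domain `cluster.local`, which your cluster may override.

```python
# Hypothetical helper: the short names above (dify-sandbox, dify-ssrf) only
# resolve from pods in the same namespace. From another namespace, use the
# fully qualified Service name <service>.<namespace>.svc.<cluster-domain>.
from urllib.parse import urlsplit, urlunsplit


def to_fqdn_url(url: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Rewrite a URL whose host is a bare Service name into its FQDN form.

    Hosts that already contain a dot or a colon (FQDNs, IPv4/IPv6 literals)
    are returned unchanged. Any user:password part of the URL is dropped.
    `cluster.local` is only the common default cluster domain.
    """
    parts = urlsplit(url)
    host = parts.hostname
    if host is None or "." in host or ":" in host:
        return url
    netloc = f"{host}.{namespace}.svc.{cluster_domain}"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


print(to_fqdn_url("http://dify-sandbox:8194", "dify"))
print(to_fqdn_url("http://dify-ssrf:3128", "dify"))
```

For the manifests above, this would turn CODE_EXECUTION_ENDPOINT into http://dify-sandbox.dify.svc.cluster.local:8194. The FQDN form works from any namespace, so it is also a safe choice if the services may later be split across namespaces.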